Linear Regression with Math.NET Numerics

Likely the most requested feature for Math.NET Numerics is support for some form of regression, or fitting data to a curve. I'll show in this article how you can easily compute regressions manually using Math.NET, until we support it out of the box. We already have broad interpolation support, but interpolation is about fitting some curve exactly through a given set of data points and therefore an entirely different problem.

For a regression there are usually much more data points available than curve parameters, so we want to find the parameters that produce the lowest errors on the provided data points, according to some error metric.

Least Squares Linear Regression

If the curve is linear in its parameters, then we're speaking of linear regression. The problem becomes much simpler and we can leverage the rich linear algebra toolset to find the best parameters, especially if we want to minimize the square of the errors (least squares metric).

In the general case such a curve would be in the form of a linear combination of \(N\) arbitrary but known functions \(f_i(x)\), scaled by the parameters \(p_i\). Note that none of the functions \(f_i\) depends on any of the \(p_i\) parameters.

\[y : x \mapsto p_1 f_1(x) + p_2 f_2(x) + \cdots + p_N f_N(x)\]

If we have \(M\) data points \((x_j,y_j)\), then we can write the whole problem as an overdefined system of \(M\) equations:

\[\begin{eqnarray} y_1 &=& p_1 f_1(x_1) + p_2 f_2(x_1) + \cdots + p_N f_N(x_1) \\ y_2 &=& p_1 f_1(x_2) + p_2 f_2(x_2) + \cdots + p_N f_N(x_2) \\ &\vdots& \\ y_M &=& p_1 f_1(x_M) + p_2 f_2(x_M) + \cdots + p_N f_N(x_M) \end{eqnarray}\]

Or in matrix notation:

\[\begin{eqnarray} \mathbf y &=& \mathbf X \mathbf p \\ \begin{bmatrix}y_1\\y_2\\ \vdots \\y_M\end{bmatrix} &=& \begin{bmatrix}f_1(x_1) & f_2(x_1) & \cdots & f_N(x_1)\\f_1(x_2) & f_2(x_2) & \cdots & f_N(x_2)\\ \vdots & \vdots & \ddots & \vdots\\f_1(x_M) & f_2(x_M) & \cdots & f_N(x_M)\end{bmatrix} \begin{bmatrix}p_1\\p_2\\ \vdots \\p_N\end{bmatrix} \end{eqnarray}\]

This is a standard least squares problem and can easily be solved using Math.NET Numerics's linear algebra classes and the QR decomposition. In literature you'll usually find algorithms explicitly computing some form of matrix inversion. While symbolically correct, using the QR decomposition instead is numerically more robust. This is a solved problem, after all.

1:

|

|

Some \(\mathbf{X}\) matrices of this form have well known names, for example the Vandermonde-Matrix for fitting to a polynomial.

Example: Fitting to a Line

A line can be parametrized by the height \(a\) at \(x=0\) and its slope \(b\):

\[y : x \mapsto a + b x\]

This maps to the general case with \(N=2\) parameters as follows:

\[p_1 = a, f_1 : x \mapsto 1 \\ p_2 = b, f_2 : x \mapsto x\]

And therefore the equation system

\[\begin{bmatrix}y_1\\y_2\\ \vdots \\y_M\end{bmatrix} = \begin{bmatrix}1 & x_1\\1 & x_2\\ \vdots & \vdots\\1 & x_M\end{bmatrix} \begin{bmatrix}a\\b\end{bmatrix}\]

The complete code when using Math.NET Numerics would look like this:

1: 2: 3: 4: 5: 6: 7: 8: 9: 10: 11: 12: 13: |

|

Example: Fitting to an arbitrary linear function

The functions \(f_i(x)\) do not have to be linear in \(x\) at all to work with linear regression, as long as the resulting function \(y(x)\) remains linear in the parameters \(p_i\). In fact, we can use arbitrary functions, as long as they are defined at all our data points \(x_j\). For example, let's compute the regression to the following complicated function including the Digamma function \(\psi(x)\), sometimes also known as Psi function:

\[y : x \mapsto a \sqrt{\exp x} + b \psi(x^2)\]

The resulting equation system in Matrix form:

\[\begin{bmatrix}y_1\\y_2\\ \vdots \\y_M\end{bmatrix} = \begin{bmatrix}\sqrt{\exp{x_1}} & \psi(x_1^2)\\\sqrt{\exp{x_2}} & \psi(x_2^2)\\ \vdots & \vdots\\\sqrt{\exp{x_M}} & \psi(x_M^2)\end{bmatrix} \begin{bmatrix}a\\b\end{bmatrix}\]

The complete code with Math.NET Numerics, but this time with F#:

1: 2: 3: 4: 5: 6: 7: 8: 9: 10: 11: 12: 13: 14: 15: 16: 17: 18: 19: 20: |

|

Note that we use the Math.NET Numerics F# package

here (e.g. for the vector function).

Example: Fitting to a Sine

Just like the digamma function we can also target a sine curve. However, to make it more interesting, we're also looking for phase shift and frequency parameters:

\[y : x \mapsto a + b \sin(c + \omega x)\]

Unfortunately the function \(f_2 : x \mapsto \sin(c + \omega x)\) now depends on parameters \(c\) and \(\omega\) which is not allowed in linear regression. Indeed, fitting to a frequency \(\omega\) in a linear way is not trivial if possible at all, but for a fixed \(\omega\) we can leverage the following trigonometric identity:

\[\begin{eqnarray} a+b\sin(c + \omega x) &=& a+u\sin{\omega x}+v\cos{\omega x} \\ b &=& \sqrt{u^2+v^2} \\ c &=& \operatorname{atan2}(v,u) \end{eqnarray}\]

and therefore

\[\begin{bmatrix}y_1\\y_2\\ \vdots \\y_M\end{bmatrix} = \begin{bmatrix}1 & \sin \omega x_1 & \cos \omega x_1\\1 & \sin \omega x_2 & \cos \omega x_2\\ \vdots & \vdots & \vdots\\1 & \sin \omega x_M & \cos \omega x_M\end{bmatrix} \begin{bmatrix}a\\u\\v\end{bmatrix}\]

However, note that because of the non-linear transformation on the \(b\) and \(c\) parameters, the result will no longer be strictly the least square error solution. While our result would be good enough for some scenarios, we'd either need to compensate or switch to non-linear regression if we need the actual least square error parameters.

The complete code in C# with Math.NET Numerics would look like this:

1: 2: 3: 4: 5: 6: 7: 8: 9: 10: 11: 12: 13: 14: 15: 16: 17: 18: 19: |

|

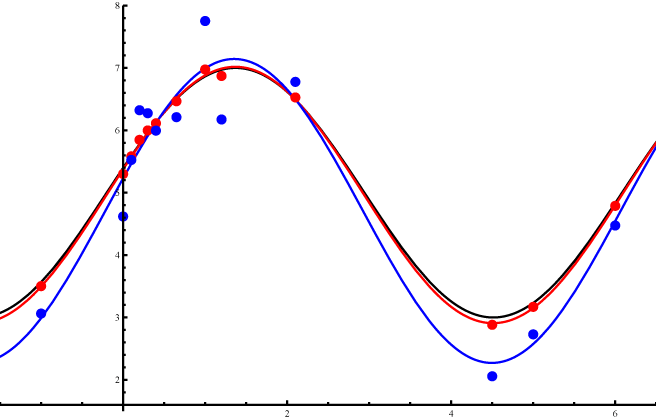

The following graph visualizes the resulting regressions. The curve we computed the \(y\) values from, before adding the strong noise, is shown in black. The red dots show the actual data points with only small noise, the blue dots the points with much stronger noise added. The red and blue curves then show the actual computed regressions for each.

Full name: linearregressionmathnetnumerics.f1

Full name: linearregressionmathnetnumerics.f2

Full name: linearregressionmathnetnumerics.fy

Full name: linearregressionmathnetnumerics.xdata

Full name: linearregressionmathnetnumerics.ydata

module List

from Microsoft.FSharp.Collections

--------------------

type List<'T> =

| ( [] )

| ( :: ) of Head: 'T * Tail: 'T list

interface IEnumerable

interface IEnumerable<'T>

member GetSlice : startIndex:int option * endIndex:int option -> 'T list

member Head : 'T

member IsEmpty : bool

member Item : index:int -> 'T with get

member Length : int

member Tail : 'T list

static member Cons : head:'T * tail:'T list -> 'T list

static member Empty : 'T list

Full name: Microsoft.FSharp.Collections.List<_>

Full name: Microsoft.FSharp.Collections.List.map

Full name: linearregressionmathnetnumerics.X

Full name: linearregressionmathnetnumerics.y

Full name: linearregressionmathnetnumerics.p

Full name: linearregressionmathnetnumerics.a

Full name: linearregressionmathnetnumerics.b